In the previous post I stated that I was only interested in the lower half of the student performance curve. This post will expand on the reasons.

Color TV Sets

If you are like most folks, you hung onto the first color television that you bought "new" for twenty years. It was still working fine, even if the picture was a little bit small, when She-Who-Must-Be-Obeyed commanded it gone. The plastic housing was discolored and the faux woodgrain trim looked dated. You did not kick because you were going to get a newer, bigger one anyway.

You put it beside the curb for the trashman but it was stolen by some college kids. It was passed down from one flight of kids to the next. It was still working eight years later.

It was finally taken to the thrift store (which refused it) and thence to any convenient dumping place when the price of flat screen TVs dropped below $70/horizontal square foot.

The reason for discussing "The History of Every Color TV Set" is that every TV, every brand, every price-point unfailingly worked out-of-the-box for two or three decades. These are devices that contain the equivalent of 5 million "discrete" devices...resistors, capacitors, diodes, etc. And the device, in total, demonstrated reliability levels on par with, oh, the gravitational field.

How did they do that?

Sudden Death Testing

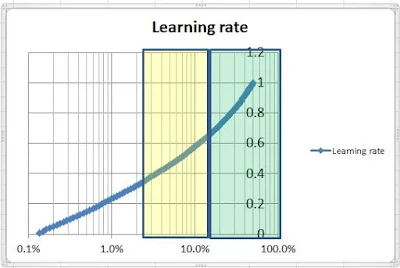

The reliability engineers/statisticians realized that the performance of the device, with 5 million discretes, was not characterized by the best performing individual discrete, or the typical performing discrete...it was fully determined by the weakest performing discrete of the five million. That is, the longevity of the device was determined by the very, very tip of the "weak" end of the curve.
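To put a number on how far out on the "weak" tail this pushes you, here is a minimal sketch. It assumes the discretes fail independently and that the device dies when any one of them dies (a series system). The 99% device-level target is a hypothetical figure chosen for illustration, and the function name is made up for this example.

```python
import math

def allowed_part_failure_prob(device_reliability: float, n_parts: int) -> float:
    """Largest per-part failure probability that still lets a series system
    of n_parts independent parts reach device_reliability.

    Solves (1 - q) ** n_parts == device_reliability for q.
    expm1/log keep precision when q is tiny.
    """
    return -math.expm1(math.log(device_reliability) / n_parts)

N_PARTS = 5_000_000      # the "5 million discretes" from the text
TARGET = 0.99            # hypothetical: 99% of sets survive the period

q = allowed_part_failure_prob(TARGET, N_PARTS)
print(f"Each discrete may fail with probability at most {q:.3e}")
```

Under those assumptions each discrete is allowed a failure probability of roughly two in a billion. That is why the typical discrete tells you almost nothing about the set.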

Sudden Death Testing was one of the attempts to flesh out just the tip, and no more, of that curve.

Imagine a technician making a test bench where he can test one hundred devices simultaneously. "Old think" would have him test all one hundred devices until failure. In Sudden Death Testing, he tests until the first of the one hundred fails. He records the number of cycles. Throws away all one hundred devices. Loads in one hundred new devices. Lather. Rinse. Repeat.
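The bench procedure can be sketched as a small simulation. This is only an illustration: the Weibull lifetime distribution, its scale and shape, and the function names are all assumptions of mine, not measurements from any real test bench.

```python
import random

def sudden_death_run(rng, n_devices=100, scale=1000.0, shape=2.0):
    """Cycles at which the FIRST of n_devices fails.

    Lifetimes are drawn from a hypothetical Weibull distribution;
    the other n_devices - 1 lifetimes are discarded, as on the bench.
    """
    return min(rng.weibullvariate(scale, shape) for _ in range(n_devices))

def sudden_death_campaign(n_batches, seed=0, **kwargs):
    """Repeat the run on fresh batches and record each first failure."""
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    return [sudden_death_run(rng, **kwargs) for _ in range(n_batches)]

first_failures = sudden_death_campaign(n_batches=20)
print(f"Earliest first-failure: {min(first_failures):.1f} cycles")
print(f"Mean first-failure:     {sum(first_failures) / len(first_failures):.1f} cycles")
```

Every recorded number comes from the weakest device in its batch, so twenty runs give twenty looks at the tip of the curve and nothing else.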

[Figure: A representation of a capacitor]

Suppose the engineer determines that the failure is caused by arcing/bridging across the arms of a capacitor etched into a chip. Furthermore, suppose that the physical evidence (scorching) is at the tip of the capacitor arm.

Because of the nature of integrated circuits, the engineer can have hundreds of thousands (or millions) of copies of an alternative capacitor geometry etched into a single, custom chip. He can plug one hundred of those custom chips into his test fixture and let it rip. And then one hundred more...

And all he is interested in is the performance of the very weakest capacitor in the entire field, because that single capacitor...or whatever...will define the performance of the electronic device in the field.

Gaussian Integration Points

Here is another line of reasoning that ends up in close to the same place.

Imagine you have been given the job of quantifying the "state" of an oddly shaped region. It might be the fuel level in a fuel tank on a fly-by-wire aircraft where the center-of-mass must be precisely known to avoid instability. It might be quantifying the temperature or oxygen status of a lake, or sampling leaves in a field or the canopy of a tree to quantify fertilizer requirements.

[Figure: This is what our "region" looks like.]

[Figure: The problem is trivial if you only have budget for one sensor. You know, intuitively, to put it in the center.]

[Figure: Which is better? This?]

[Figure: Or this?]

Where do Messrs. Gauss and Legendre say we should collect our data?

[Figure: With only one point there is no issue regarding how much to weight or discount different points.]

It is comforting to know that the math agrees with intuition for the case of a single collection point.

It gets a little surprising as the number of points goes to two and three.
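For readers who want to see the numbers, here is a minimal sketch. It simplifies the oddly shaped region to a one-dimensional interval running from 0 to 1, which is my assumption for illustration. It uses the standard closed-form Gauss-Legendre points and weights for one, two and three points, rescaled from the textbook interval of -1 to 1. The names are made up for this example.

```python
import math

# Closed-form Gauss-Legendre nodes and weights on [-1, 1].
_RULES = {
    1: ([0.0], [2.0]),
    2: ([-1 / math.sqrt(3), 1 / math.sqrt(3)], [1.0, 1.0]),
    3: ([-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5)], [5 / 9, 8 / 9, 5 / 9]),
}

def gauss_legendre_unit(n):
    """(position, weight) pairs on [0, 1]; the weights sum to 1."""
    nodes, weights = _RULES[n]
    return [((x + 1) / 2, w / 2) for x, w in zip(nodes, weights)]

def estimate_mean(f, n):
    """Estimate the average of f over [0, 1] from n sample points."""
    return sum(w * f(p) for p, w in gauss_legendre_unit(n))

for n in (1, 2, 3):
    pairs = gauss_legendre_unit(n)
    print(n, [(round(p, 4), round(w, 4)) for p, w in pairs])
```

With one point the rule picks the center, as intuition says. With two points it moves away from both the center and the ends, to about 21% and 79% of the way across, weighted equally. With three points it samples near 11%, 50% and 89%, with the middle point weighted more heavily (4/9 against 5/18 for each outer point).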

Summary of ideas presented to date:

- Performance of lower performing students characterizes a teacher's ability better than the performance of the high-end students.

- The math of sampling suggests that meaningful information can be obtained by looking at the 11th percentile student and the 50th percentile student and combining them equally. For an elementary classroom of 25 kids that would be the performance of the 3rd student from the bottom and the 12th student from the bottom.

- Assessment should be made on the basis of knowledge growth to wash out the effect of prior mis-teaching.

The case against this approach

The number of arguments against this approach is limited only by the scope of the human imagination. I will deal with one of the most likely.

First argument: The lower end of my class is dominated by special-ed students. They are a separate species and should be removed from the assessment.

Reply: There is an urban legend regarding a teacher who failed to mark her "special-ed qualified" students absent when they did not show up. When the administrator finally investigated, the teacher responded, "I don't even know why they are on my attendance list because they are really not my responsibility."

If you have more than 10% special-ed kids in your classroom, then your peers probably also have more than 10% special-ed kids in theirs.

At a nuts-and-bolts level, they are not really a different species. The same teaching habits that are effective with special-ed students help regular-ed students.

- Own the students. All of them are yours.

- Maintain classroom order. Chaos helps nobody and it massively penalizes the struggling.

- Know the material and present it smoothly with no confusing turns down blind alleys.

- In soccer-speak, carry the ball close to your feet and get in lots of touches. That is, present a small amount of material and check with the class to ensure that you were effective in your presentation. If you were not, clear up any misunderstandings before moving on.

- Do all of your fiddly administrative tasks during your planning time so you can maximize time-on-task when interacting with the students.

A few notes about "Handicapping"

Several years ago, one of the Detroit papers wrote an essay discussing how various suburbs (and Detroit itself) performed on standardized tests relative to their "Handicap".

The paper hired a statistician to create a regression model with approximately 30 variables that all educators agreed impacted education. Some communities vastly outscored their handicap, suggesting that they were outperforming expectations relative to the demographics of the community they served. Others underperformed.

One of the quirks of "driving" a model with too many parameters is that many of the parameters are highly correlated with each other. When that happens, the random "noise" in the data can produce highly illogical coefficients. For example, the model might list 8 different parameters to quantify economic resources (percent single-parent households, mean income, percent free lunch, tax base per student...). The unbending nature of math can make some of the coefficients very large and positive and others very negative as it forces the line to a best fit.
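The effect is easy to reproduce on made-up data. The sketch below is not the newspaper's model. It fits a two-parameter regression over and over on synthetic data where both true coefficients equal 1, and measures how much the first fitted coefficient bounces around. The `jitter` argument is a hypothetical knob: a small value makes the second parameter a near copy of the first.

```python
import random
import statistics

def fit_two_predictors(x1, x2, y):
    """Least-squares (b1, b2) for y ~ b1*x1 + b2*x2, no intercept,
    solving the 2x2 normal equations with Cramer's rule."""
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * c for a, c in zip(x1, y))
    s2y = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    return (s1y * s22 - s2y * s12) / det, (s2y * s11 - s1y * s12) / det

def coefficient_spread(jitter, trials=200, n=50, seed=1):
    """Standard deviation of the fitted b1 across repeated synthetic data sets."""
    rng = random.Random(seed)
    b1_values = []
    for _ in range(trials):
        x1 = [rng.gauss(0, 1) for _ in range(n)]
        x2 = [a + rng.gauss(0, jitter) for a in x1]   # correlated with x1
        y = [a + b + rng.gauss(0, 1) for a, b in zip(x1, x2)]  # true b1 = b2 = 1
        b1_values.append(fit_two_predictors(x1, x2, y)[0])
    return statistics.pstdev(b1_values)

print("spread, loosely correlated parameters:", round(coefficient_spread(1.0), 2))
print("spread, nearly duplicate parameters:  ", round(coefficient_spread(0.05), 2))
```

The data-generating rule is identical in both cases. Only the overlap between the two parameters changes, and the nearly duplicate pair produces a far wider scatter of fitted coefficients, including negative ones.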

In my mind, it is far more elegant to choose a few core parameters and perform any handicapping with those alone.

Examples might include percent single-parent households, which is a reasonable proxy for economic factors, at-home chaos and maximum educational attainment of the parent(s).

Another Swiss Army knife parameter is the ratio of adults (teacher + paraprofessionals) in the classroom to students.